What’s Seattle’s Moderate slate in 2023? Learn where Seattle City Council candidates stand on public safety, addiction and homelessness.

Author: Steve Murch

Supreme Court Affirms Free Expression in 303 Creative Case

“The First Amendment’s protections belong to all, not just to speakers whose motives the government finds worthy.” My nomination for Best and Perhaps Most Important Sentence of the Decade.

How to Add NPR or CBC’s Headlines Back Onto Your Twitter Timeline

Do you miss NPR’s news on your Twitter feed? Simply follow @NPRbrief on Twitter to get the headlines.

Circling Back to “Don’t Discount Lab Leak Hypothesis”, Part II

Part II of “Circling Back to Lab Leak Hypothesis”

Circling Back to a “Don’t Discount the Lab Leak” Post of January 2020

In January 2020, I posted my view that the outbreak of what would come to be called COVID quite possibly originated in a lab accident. It generated over 100 comments and ended at least one friendship. I popped by Facebook yesterday to circle back on that thread.

Uploading Images on Paste in Typora

Do you use WordPress? Consider using Typora and the free image uploader “upgit” to make embedding images automatic.

#TwitterFiles: The Complete List

An index of all the Twitter Files threads, including summaries.

Wait, Twitter Knew The “Russian Bot” Narrative Was Fake… For Five Years?

In the most explosive Twitter Files yet, Matt Taibbi uncovers the agitprop-laundering fraud engineered by a neoliberal think-tank.

Working with Environment Variables (Tech Note)

Here’s a quick cheatsheet on setting and reading environment variables across common operating systems and programming languages.

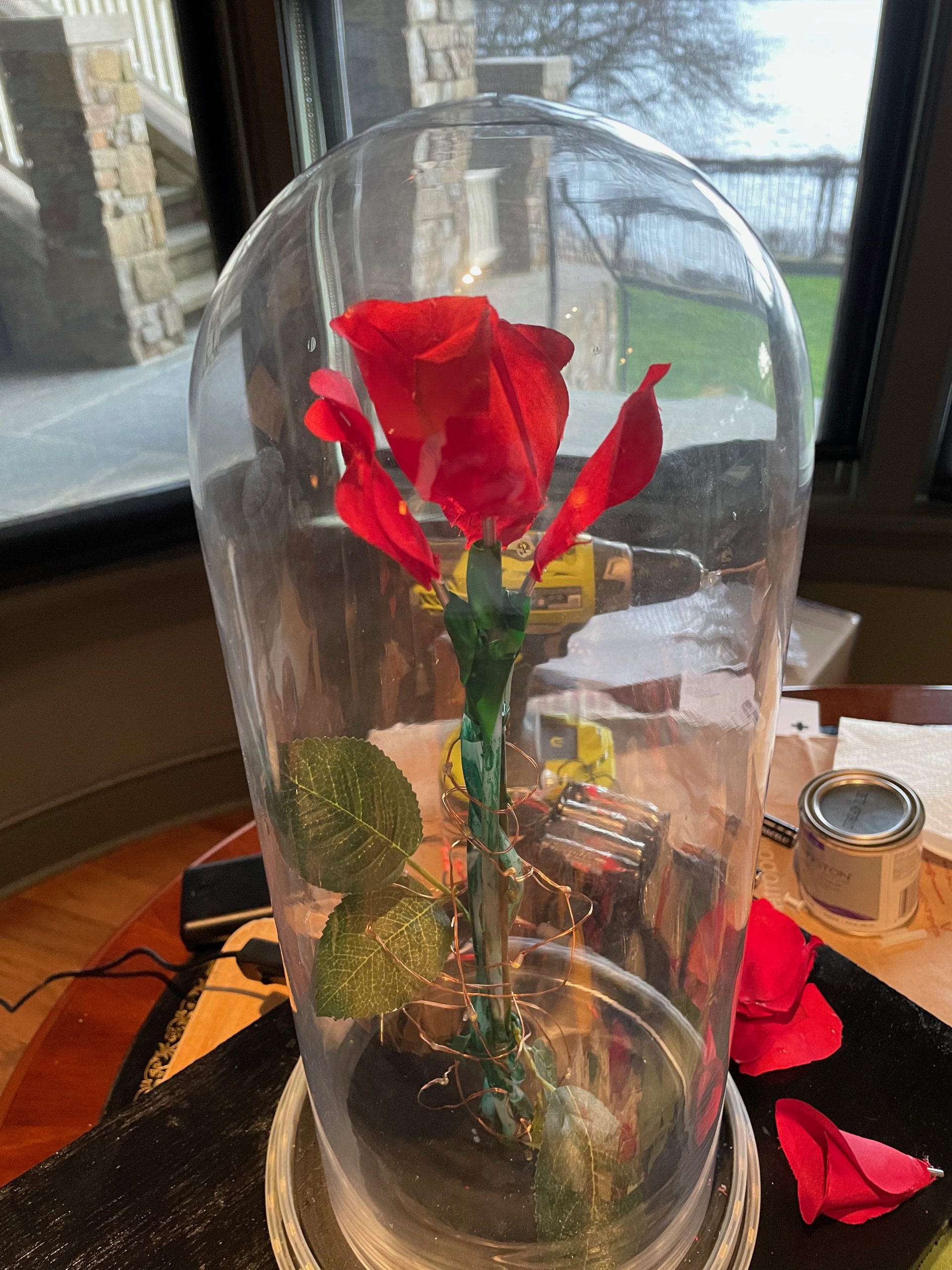

Enchanted Rose 2.0 with Falling Petals

Revisiting the Enchanted Rose project four years later, this time with an open-source build based on Raspberry Pi.
