We Need to Talk About The Crisis in Young American Men

There’s a conversation we’re not having. How are the young men doing in your life?


The Department of Health and Human Services is dropping the requirement that hospitals report daily COVID deaths, yet adding a bunch of pediatric metrics that will make the pediatric problem look much larger.

This is new for a whole lot of Americans. The last time inflation was this high, you hadn’t ever heard of the term “e-mail.”

A new study from the UK suggests that children are not, in fact, significant vectors of COVID risk.

Evidence is growing that we may be overstating COVID positives, lumping in those whose immune system has already defeated the virus.

In the urgent debate around Seattle’s homelessness crisis, many articles (such as this otherwise great one in Crosscut) cite the statistic that 35% of those who are homeless in the Seattle region have some level of substance abuse. It’s often central to the framing, especially by those who wish to portray substance…

Last night, an hour-long program aired without commercial interruption in Seattle on the addiction crisis and homelessness. It’s an important watch. I found it devastating, riveting and motivating, all at once. There is already much being made over the fact that (a) it comes from KOMO News, a station now owned by Sinclair Broadcasting, a…

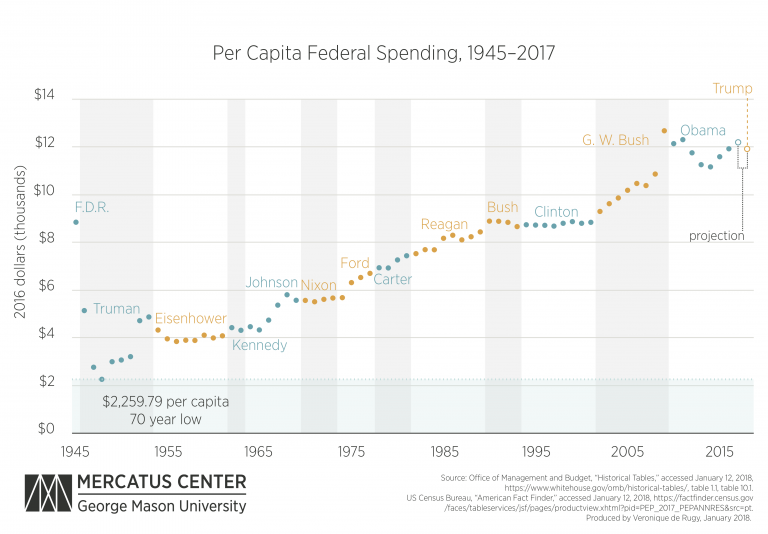

The Washington Post’s Jeff Stein estimates that raising the marginal tax rate to between 60 and 70 percent on incomes above $10 million might raise as much as $720 billion over a decade, or $72 billion per year. Some 16,000 households meet that threshold, fewer than 0.05% of all US…

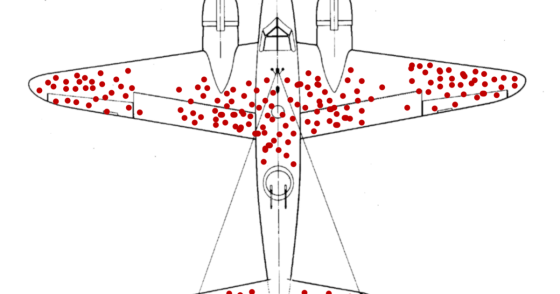

In WWII, researcher Abraham Wald was assigned the task of figuring out where to place more reinforcing armor on bombers. Since every extra pound meant reduced range and agility, optimizing these decisions was crucial. So he and his team looked at a ton of data from returning bombers, noting where the bullet holes were. They came…

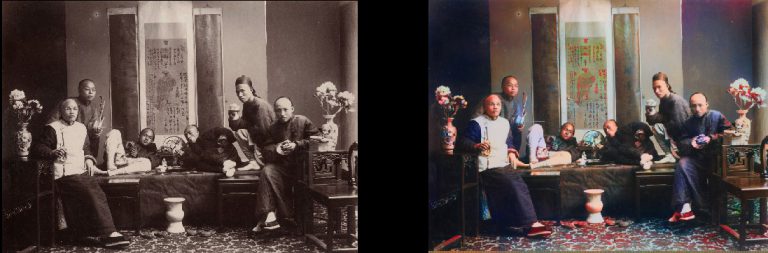

I’ve just discovered an incredibly cool project on GitHub: DeOldify, which uses deep learning to automatically colorize old black & white photos. It’s not perfect, but what it’s able to do is pretty amazing, and it’s improving rapidly. In addition to ninja-level coding, author Jason Antic (@citnaj on Twitter) does a terrific job writing up how…